Supermicro's widest range of GPU-enabled SuperServers is now ready to support the newest addition to the NVIDIA® Tesla® Accelerated Computing Platform, the NVIDIA® Tesla® M40 GPU Accelerator

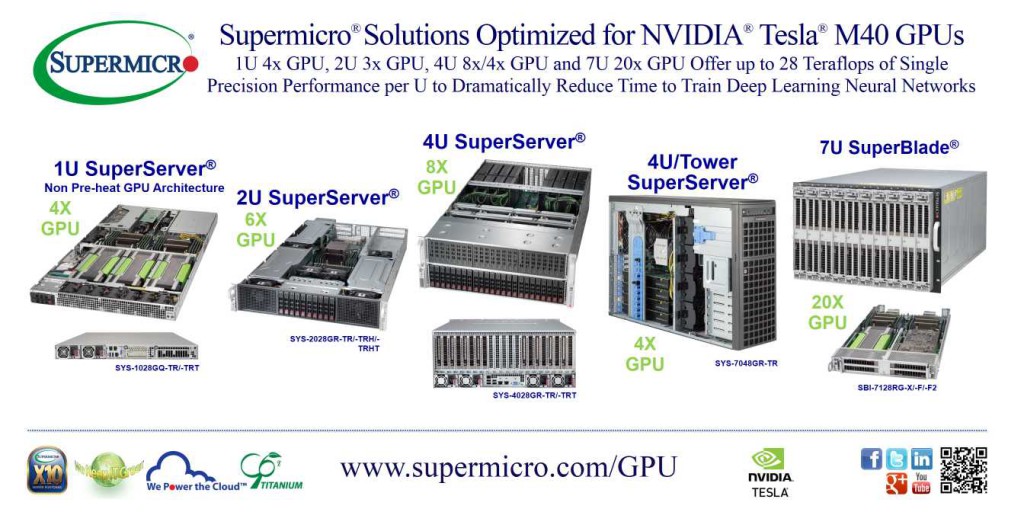

Available immediately, the Supermicro 1U 4x GPU (SYS-1028GQ-TR/-TRT), 2U 6x GPU (SYS-2028GR-TR/-TRH/-TRHT), 4U 8x GPU (SYS-4028GR-TR/-TRT), 4U/Tower 4x GPU (SYS-7048GR-TR) and 7U 20x GPU SuperBlade® (SBI-7128RG-X/-F/-F2) offer unrivaled configuration flexibility and industry-leading GPU density. With Supermicro's cooling-optimized GPU architecture in the 1U 4x GPU SYS-1028GQ-TR/-TRT, four NVIDIA Tesla M40 accelerators can operate at a sustained 1000W, delivering up to 28 teraflops of single-precision performance for deep learning training applications.
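The aggregate figures quoted for the 1U system can be broken down per accelerator with simple division. The sketch below is illustrative only: it assumes the 1000W and 28 teraflops figures refer to the four GPUs combined and are split evenly, and the constant and function names are made up for this example.

```python
# Aggregate figures quoted for the 1U 4x GPU system (SYS-1028GQ-TR/-TRT).
GPU_COUNT = 4
TOTAL_GPU_POWER_W = 1000      # sustained power across all four GPUs (assumed)
TOTAL_SP_TFLOPS = 28          # peak single-precision throughput, all GPUs

def per_gpu(total, gpu_count=GPU_COUNT):
    """Evenly split an aggregate figure across the GPUs."""
    return total / gpu_count

def gflops_per_watt(tflops=TOTAL_SP_TFLOPS, watts=TOTAL_GPU_POWER_W):
    """Peak single-precision GFLOPS per watt of GPU power."""
    return tflops * 1000 / watts

if __name__ == "__main__":
    print(f"Power per GPU:  {per_gpu(TOTAL_GPU_POWER_W):.0f} W")
    print(f"TFLOPS per GPU: {per_gpu(TOTAL_SP_TFLOPS):.0f}")
    print(f"Efficiency:     {gflops_per_watt():.0f} GFLOPS/W")
```

Under these assumptions each accelerator accounts for 250W and 7 teraflops of peak single-precision performance.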

NVIDIA Tesla M40:

The NVIDIA Tesla M40 is purpose-built for deep learning training at scale, dramatically reducing the time required to train sophisticated neural networks. With support for the new Tesla M40 GPUs, Supermicro offers data scientists a range of high-performance, high-density accelerated platforms to advance their most challenging deep learning projects.

Supported solutions:

| Solution | Form factor | Highlights |
| --- | --- | --- |
| 4x GPU (SYS-1028GQ-TR/-TRT) | 1U | Supports 4x NVIDIA Tesla M40 GPUs, dual Intel® Xeon® processor E5-2600 v3, up to 1TB ECC DDR4 2133MHz in 16x DIMM slots, 4x PCI-E 3.0 x16 slots, 2x PCI-E 3.0 x8 (in x16) LP slots, dual-port GbE LAN (-TR), dual 10GBase-T (-TRT), 2x 2.5″ hot-swap drive bays, 2x 2.5″ internal drive bays, efficient-airflow heavy-duty counter-rotating fans with air shroud and optimal fan speed control, redundant 2000W Titanium Level (96%+) power supplies |
| 6x GPU (SYS-2028GR-TR/-TRH/-TRHT) | 2U | Supports 6x NVIDIA Tesla M40 GPUs, dual Intel® Xeon® processor E5-2600 v3 family, 10x 2.5″ hot-swap SATA3 HDD bays, 16x DIMM slots; up to 1TB DDR4 2133MHz reg. ECC memory, 4x PCI-E 3.0 x16 and 1x PCI-E 3.0 x8 slots, 2x GbE/10GBase-T (-TRT, -TRHT), 1x video, 4x USB 3.0 (2 rear, 2 via header), 2x USB 2.0 (2 via header), built-in server management tool (IPMI 2.0, KVM/media over LAN) with dedicated LAN port, redundant 2000W Platinum Level (94%+) power supplies |
| 8x GPU (SYS-4028GR-TR/-TRT) | 4U | Supports 8x NVIDIA Tesla M40 GPUs, dual Intel® Xeon® processor E5-2600 v3 (up to 160W), up to 1.5TB ECC DDR4 2133MHz in 24 DIMM slots, 24x 2.5″ hot-swap SAS2/SATA3 drive bays, 8x PCI-E 3.0 x16 slots (double-width), 2x PCI-E 3.0 x8 (in x16) slots, 1x PCI-E 2.0 x4 (in x16) slot, dual 1GbE/10GBase-T (-TRT), four redundant 1600W Platinum Level (94%+) power supplies |
| 4x GPU (SYS-7048GR-TR) | 4U/Tower | Supports 4x NVIDIA Tesla M40 GPUs, dual Intel® Xeon® processor E5-2600 v3 family, 8x 3.5″ hot-swap SATA HDD bays, 3x 5.25″ peripheral drive bays, 1x 3.5″ fixed drive bay, 16x DIMM slots; up to 1TB DDR4 2133MHz reg. ECC memory, 4x PCI-E 3.0 x16 (4 GPU cards opt.), 2x PCI-E 3.0 x8 (1 in x16) and 1x PCI-E 2.0 x4 (in x8) slots, 2x GbE, 1x video, 2x COM/serial, 5x USB 3.0, 4x USB 2.0, built-in server management tool (IPMI 2.0, KVM/media over LAN) with dedicated LAN port, redundant 2000W Titanium Level (96%+) power supplies |
| SuperBlade® (SBI-7128RG-X/-F/-F2) | 7U | 20x NVIDIA Tesla M40 GPUs in 10 blade servers, each supporting dual Intel® Xeon® processor E5-2600 v3 family, up to 512GB DDR4 2133MT/s ECC RDIMM in 8 DIMM slots, 1x 2.5″ SSD, 1x SATADOM, FDR 56Gb/s InfiniBand switches, 1 and 10Gb/s Ethernet switches, redundant chassis management module (CMM), and Titanium Level (96%+) 3200W and Platinum Level (95%) 3000W/2500W (N+N or N+1 redundant) power supplies with cooling fans |
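To compare GPU density across these form factors, one rough measure is GPUs per rack unit. The following is a minimal sketch using only the GPU counts and chassis heights listed above; it assumes the 4U/Tower system is counted as 4U when rack-mounted, and the names `SYSTEMS` and `density_table` are hypothetical helpers for this example.

```python
# (model, GPU count, chassis height in rack units), taken from the table above.
# Assumption: the 4U/Tower SYS-7048GR-TR is counted as 4U.
SYSTEMS = [
    ("SYS-1028GQ-TR/-TRT", 4, 1),
    ("SYS-2028GR-TR/-TRH/-TRHT", 6, 2),
    ("SYS-4028GR-TR/-TRT", 8, 4),
    ("SYS-7048GR-TR", 4, 4),
    ("SBI-7128RG-X/-F/-F2", 20, 7),
]

def gpus_per_rack_unit(gpus, rack_units):
    """GPU density expressed as GPUs per rack unit (U)."""
    return gpus / rack_units

def density_table(systems=SYSTEMS):
    """Map each model to its density, rounded to two decimals."""
    return {
        name: round(gpus_per_rack_unit(gpus, units), 2)
        for name, gpus, units in systems
    }

if __name__ == "__main__":
    ranked = sorted(density_table().items(), key=lambda kv: kv[1], reverse=True)
    for name, density in ranked:
        print(f"{name}: {density} GPUs per U")
```

By this measure the 1U SYS-1028GQ-TR/-TRT is the densest option at 4 GPUs per U. The figure ignores CPU, memory, storage and power differences, so it is only one input when choosing a platform.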