Cluster Computing

What Is Cluster Computing

A computer cluster consists of a set of loosely or tightly connected computers that work together so that, in many respects, they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software.

There are several common approaches to clustering computers:

- Clustering for redundancy and load balancing/sharing.

- Clustering for high-performance computing.

- Clustering for storage (storage cluster).

Clustering For Redundancy And Load Balancing/Sharing:

This solution is based on two main clustering technologies: failover clusters and Network Load Balancing (NLB). Failover clusters primarily provide high availability, while Network Load Balancing provides scalability and at the same time helps increase the availability of services.

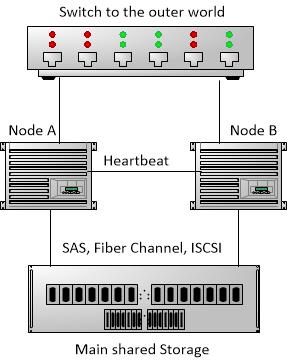

From a hardware point of view, you must have at least two nodes (computer systems) connected to at least one shared storage device through a SAS, Fibre Channel, or iSCSI interface.

The nodes must have at least two Ethernet ports. One port connects the nodes to each other and carries the heartbeat, a software mechanism that monitors the state of the cluster; the other port connects to the outside world.
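To make the heartbeat idea concrete, here is a minimal sketch, assuming each node records when it last heard from each peer over the private link and declares a peer failed after a fixed timeout. The class `HeartbeatMonitor`, its methods, and the 5-second timeout are hypothetical; real cluster managers use their own thresholds and also apply quorum rules before acting on a failure.

```python
import time
from typing import Dict, List, Optional


class HeartbeatMonitor:
    """Hypothetical heartbeat tracker: a peer is failed after `timeout` seconds of silence."""

    def __init__(self, peers: List[str], timeout: float = 5.0) -> None:
        self.timeout = timeout
        now = time.monotonic()
        # Assume every peer is alive at start-up.
        self.last_seen: Dict[str, float] = {peer: now for peer in peers}

    def record(self, peer: str, at: Optional[float] = None) -> None:
        # Called whenever a heartbeat arrives from `peer` on the heartbeat port.
        self.last_seen[peer] = time.monotonic() if at is None else at

    def failed_nodes(self, now: Optional[float] = None) -> List[str]:
        # A peer counts as failed if no heartbeat arrived within the timeout.
        now = time.monotonic() if now is None else now
        return sorted(p for p, t in self.last_seen.items() if now - t > self.timeout)


if __name__ == "__main__":
    monitor = HeartbeatMonitor(["node-b"], timeout=5.0)
    start = time.monotonic()
    monitor.record("node-b", at=start)
    print(monitor.failed_nodes(now=start + 3))  # [] : still within the timeout
    print(monitor.failed_nodes(now=start + 6))  # ['node-b'] : heartbeat missed
```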

All connections to the outside world are configured behind a single address that serves as the cluster's main access point (see Figure A).

From a software point of view, applications or server applications can be spread across the nodes for load sharing and balancing. If one node fails, all applications on the failed node automatically move to the other nodes and keep running, so users can continue their work unaffected and retain access to their shares.

Figure A: Block Diagram
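The failover step described above can be sketched as a reassignment: every application on the failed node is moved to a surviving node, here always the least-loaded one. The function `fail_over` and the least-loaded policy are assumptions for illustration; an actual failover cluster manager applies its own preferred-owner and priority settings.

```python
from typing import Dict, List


def fail_over(assignments: Dict[str, List[str]], failed: str) -> Dict[str, List[str]]:
    """Hypothetical failover step: move every application off `failed`.

    `assignments` maps node name -> applications running on that node.
    Returns a new mapping that no longer contains the failed node.
    """
    survivors = {node: list(apps) for node, apps in assignments.items() if node != failed}
    if not survivors:
        raise RuntimeError("no surviving nodes to fail over to")
    for app in assignments.get(failed, []):
        # Least-loaded survivor first; ties broken by node name for determinism.
        target = min(survivors, key=lambda n: (len(survivors[n]), n))
        survivors[target].append(app)
    return survivors


if __name__ == "__main__":
    cluster = {
        "node-a": ["file-share", "sql"],
        "node-b": ["web"],
        "node-c": [],
    }
    print(fail_over(cluster, "node-a"))
    # {'node-b': ['web', 'sql'], 'node-c': ['file-share']}
```

Spreading the failed node's applications across all survivors, rather than moving them all to one node, keeps the load balanced after the failure.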

A good example is the SuperMicro CIB (Cluster in a Box): a dual-head machine whose main storage is connected to both nodes through a SAS interface, shipped with Windows Server 2012 R2 Failover Cluster Manager installed and ready to work.

Figure B: CIB (Storage Bridge Bay Cluster In a box)

Clustering For High Performance Computing (HPC Cluster):

High-performance computing (HPC) generally refers to the practice of aggregating computing power in a way that delivers much higher performance than a typical desktop computer or workstation, in order to solve large problems in science, engineering, or business.

From a hardware point of view, you use as many nodes as you can, packed at as high a density as possible. Each node should preferably have as many CPU cores and as much memory as possible.

The capacity of such a cluster is therefore usually measured by its total core count.

The next thing to consider is the traffic between nodes, which must be nearly as fast as a local bus. For that, you typically use InfiniBand, a lightweight interconnect protocol with very high throughput and low latency.

Finally, you need dedicated software that can run the entire cluster as one entity: it splits an application across all cores using parallel computing and, at the end, combines the partial results into a single result.
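The split, compute, and combine pattern can be shown on a single machine. Real HPC clusters usually distribute the work with a message-passing library such as MPI; this minimal sketch only mimics the idea, and the functions `split_range`, `partial_sum_of_squares`, and `parallel_sum_of_squares` are hypothetical stand-ins for an actual workload.

```python
from typing import Callable, List


def split_range(n: int, parts: int) -> List[range]:
    """Divide range(n) into `parts` contiguous chunks whose sizes differ by at most one."""
    base, extra = divmod(n, parts)
    chunks: List[range] = []
    start = 0
    for i in range(parts):
        size = base + (1 if i < extra else 0)
        chunks.append(range(start, start + size))
        start += size
    return chunks


def partial_sum_of_squares(chunk: range) -> int:
    # The work one core performs independently on its own slice of the problem.
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(n: int, cores: int, mapper: Callable = map) -> int:
    # Split: one chunk per core. Compute: map the work over the chunks.
    # Combine: reduce the partial results into a single answer.
    partials = mapper(partial_sum_of_squares, split_range(n, cores))
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(1_000_000, cores=8))
```

With the default `map` the chunks run one after another; to run them in parallel on one machine you could pass `executor.map` from a `concurrent.futures.ProcessPoolExecutor`, while on a cluster the same chunks would be sent to cores on different nodes.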

Storage Cluster

A storage cluster is based on a distributed object store and file system designed to provide excellent performance, reliability, and scalability.

From a hardware point of view, redundancy and performance come from a cluster of systems (which need not share the same configuration) that spreads the data evenly across the nodes, writes several copies of the same data to different nodes for redundancy, and reads the data evenly from the nodes for performance.
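CEPH places data with its own CRUSH algorithm; the sketch below instead uses rendezvous (highest-random-weight) hashing, a simpler well-known technique, to illustrate the same two properties: each object is written to several distinct nodes, and objects spread roughly evenly across the cluster. The function names and the default of three replicas are assumptions.

```python
import hashlib
from collections import Counter
from typing import List


def _weight(node: str, key: str) -> int:
    # Deterministic pseudo-random weight for a (node, object) pair.
    digest = hashlib.sha256(f"{node}:{key}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(key: str, nodes: List[str], replicas: int = 3) -> List[str]:
    """Return `replicas` distinct nodes for object `key`, highest weight first."""
    if replicas > len(nodes):
        raise ValueError("not enough nodes for the requested number of replicas")
    ranked = sorted(nodes, key=lambda node: _weight(node, key), reverse=True)
    return ranked[:replicas]


if __name__ == "__main__":
    nodes = [f"node-{i}" for i in range(6)]
    print(place("photo-001.jpg", nodes))  # three distinct nodes hold copies
    # Count how many object copies each node holds: shares come out roughly equal.
    load = Counter(n for i in range(6000) for n in place(f"obj-{i}", nodes))
    print(sorted(load.items()))
```

Because every replica is a full copy, reads for an object can be served by any of its nodes, which is how read load is spread as well.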

A storage cluster is usually built from two major systems: one holds the metadata of the storage cluster, and the other holds the data itself.
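The split between metadata and data can be modelled with two kinds of objects: a metadata system that records where each object lives, and data nodes that hold only the bytes. This is a generic, hypothetical simplification (`DataNode`, `MetadataService`, `write`, and `read` are illustrative names); CEPH, for example, avoids a per-object lookup table by having clients compute locations from the cluster map.

```python
from typing import Dict, List


class DataNode:
    """Hypothetical storage node: holds object bytes only."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.objects: Dict[str, bytes] = {}


class MetadataService:
    """Hypothetical metadata system: records which data nodes hold each object."""

    def __init__(self) -> None:
        self.locations: Dict[str, List[str]] = {}


def write(meta: MetadataService, nodes: Dict[str, DataNode], key: str,
          data: bytes, targets: List[str]) -> None:
    # Store a copy on every target node, then record the locations as metadata.
    for name in targets:
        nodes[name].objects[key] = data
    meta.locations[key] = list(targets)


def read(meta: MetadataService, nodes: Dict[str, DataNode], key: str) -> bytes:
    # Ask the metadata system where the object lives, then fetch it from the
    # first listed node that still has a copy.
    for name in meta.locations[key]:
        if key in nodes[name].objects:
            return nodes[name].objects[key]
    raise KeyError(key)


if __name__ == "__main__":
    cluster = {n: DataNode(n) for n in ("node-1", "node-2", "node-3")}
    meta = MetadataService()
    write(meta, cluster, "report.pdf", b"hello", ["node-1", "node-2"])
    del cluster["node-1"].objects["report.pdf"]  # simulate losing one copy
    print(read(meta, cluster, "report.pdf"))  # b'hello', served by node-2
```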

(For instance, CEPH, an open-source storage system, was designed especially for the cloud but can also be used as a main storage system.)

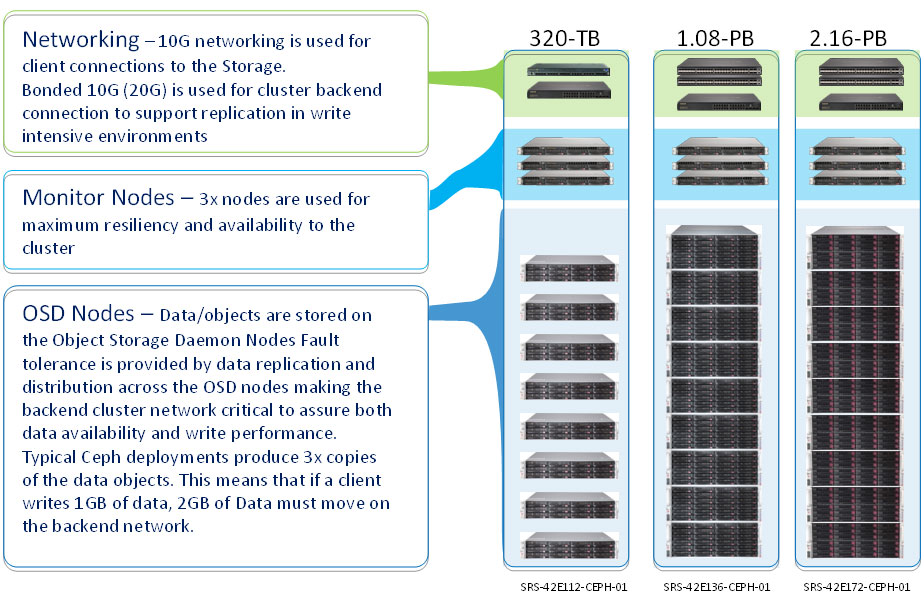

A CEPH deployment consists of two major node types:

- Monitor nodes, which hold information about the cluster (its map and state) rather than user data; three monitor nodes are the usual primary configuration.

- OSD (Object Storage Daemon) nodes, which hold the data itself.

Figure C: SuperMicro CEPH solution
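CEPH monitors agree on the cluster state by majority quorum, which is why three is the usual starting point. The short sketch below works through that arithmetic; the function names are hypothetical.

```python
def quorum_size(monitors: int) -> int:
    # A strict majority of monitors must agree for the cluster to make progress.
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    # How many monitors can fail while a majority is still reachable.
    return monitors - quorum_size(monitors)


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5):
        print(n, quorum_size(n), tolerated_failures(n))
```

Three monitors survive the loss of one, while a fourth adds no extra tolerance (it still survives only one failure), so odd monitor counts are the common choice.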

Internally, the nodes communicate over two bonded 10 GbE links, while a single 10 GbE interface is used for the outside world.

SuperMicro offers a range of fixed, approved building blocks for creating a secure, working CEPH storage system.

For any questions about the solutions presented here, please contact EIM esc.